Description

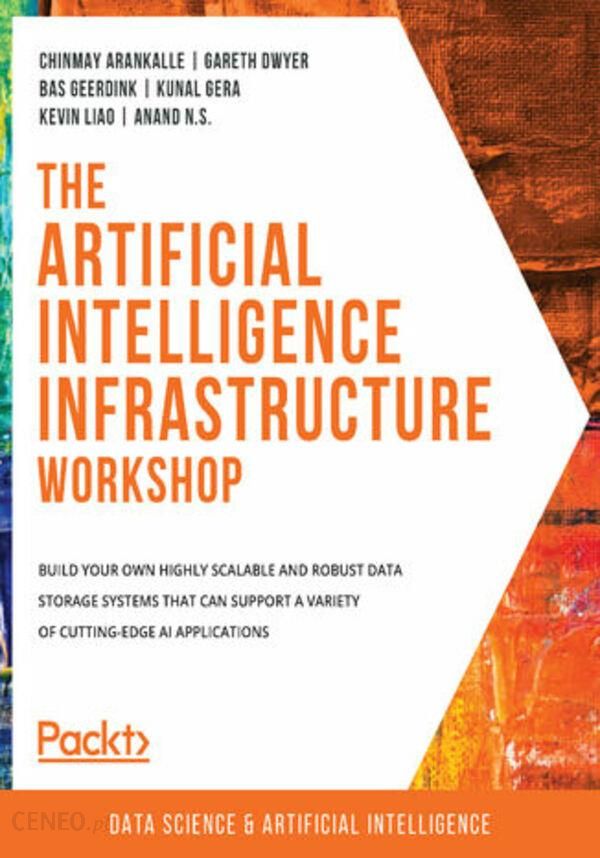

Explore how a data storage system works, from data ingestion to representation

Key Features

- Understand how artificial intelligence, machine learning, and deep learning are different from one another
- Discover the data storage requirements of different AI apps using case studies
- Explore popular data solutions such as Hadoop Distributed File System (HDFS) and Amazon Simple Storage Service (S3)

Book Description

Social networking sites see an average of 350 million uploads daily, a quantity impossible for humans to scan and analyze. Only AI can do this job at the required speed, and to leverage an AI application at its full potential, you need an efficient and scalable data storage pipeline. The Artificial Intelligence Infrastructure Workshop will teach you how to build and manage one.

The Artificial Intelligence Infrastructure Workshop begins by taking you through some real-world applications of AI. You'll explore the layers of a data lake and get to grips with security, scalability, and maintainability. With the help of hands-on exercises, you'll learn how to define the requirements for AI applications in your organization. This AI book will show you how to select a database for your system and run common queries on databases such as MySQL, MongoDB, and Cassandra. You'll also design your own AI trading system to get a feel for the pipeline-based architecture. As you learn to implement a deep Q-learning algorithm to play the CartPole game, you'll gain hands-on experience with PyTorch. Finally, you'll explore ways to run machine learning models in production as part of an AI application.

By the end of the book, you'll have learned how to build and deploy your own AI software at scale, using various tools, API frameworks, and serialization methods.

What you will learn

- Get to grips with the fundamentals of artificial intelligence
- Understand the importance of data storage and architecture in AI applications
- Build data storage and workflow management systems with open source tools
- Containerize your AI applications with tools such as Docker
- Discover commonly used data storage solutions and best practices for AI on Amazon Web Services (AWS)
- Use the AWS CLI and AWS SDK to perform common data tasks

Who this book is for

If you are looking to develop the data storage skills needed for machine learning and AI and want to learn AI best practices in data engineering, this workshop is for you. Experienced programmers can use this book to advance their career in AI. Familiarity with programming, along with knowledge of exploratory data analysis and reading and writing files using Python, will help you to understand the key concepts covered.

Table of contents:

The Artificial Intelligence Infrastructure Workshop

Preface

- About the Book
- Audience
- About the Chapters
- Conventions
- Code Presentation
- Setting up Your Environment
- Installing Anaconda
- Installing Scikit-Learn
- Installing gawk
- Installing Apache Spark
- Installing PySpark
- Installing Tweepy
- Installing spaCy
- Installing MySQL
- Installing MongoDB
- Installing Cassandra
- Installing Apache Spark and Scala
- Installing Airflow
- Installing AWS
- Registering Your AWS Account
- Creating an IAM Role for Programmatic AWS Access
- Installing the AWS CLI
- Installing an AWS Python SDK: Boto
- Installing MySQL Client
- Installing pytest
- Installing Moto
- Installing PyTorch
- Installing Gym
- Installing Docker
- Kubernetes: Minikube
- Installing Maven
- Installing JDK
- Installing Netcat
- Installing Libraries
- Accessing the Code Files

1. Data Storage Fundamentals

- Introduction
- Problems Solved by Machine Learning
- Image Processing: Detecting Cancer in Mammograms with Computer Vision
- Text and Language Processing: Google Translate
- Audio Processing: Automatically Generated Subtitles
- Time Series Analysis
- Optimizing the Storing and Processing of Data for Machine Learning Problems
- Diving into Text Classification
- Looking at TF-IDF Vectorization
- Looking at Terminology in Text Classification Tasks
- Exercise 1.01: Training a Machine Learning Model to Identify Clickbait Headlines
- Designing for Scale: Choosing the Right Architecture and Hardware
- Optimizing Hardware: Processing Power, Volatile Memory, and Persistent Storage
- Optimizing Volatile Memory
- Optimizing Persistent Storage
- Optimizing Cloud Costs: Spot Instances and Reserved Instances
- Using Vectorized Operations to Analyze Data Fast
- Exercise 1.02: Applying Vectorized Operations to Entire Matrices
- Activity 1.01: Creating a Text Classifier for Movie Reviews
- Summary

2. Artificial Intelligence Storage Requirements

- Introduction
- Storage Requirements
- The Three Stages of Digital Data
- Data Layers
- From Data Warehouse to Data Lake
- Exercise 2.01: Designing a Layered Architecture for an AI System
- Requirements per Infrastructure Layer
- Raw Data
- Security
- Basic Protection
- The AIC Rating
- Role-Based Access
- Encryption
- Exercise 2.02: Defining the Security Requirements for Storing Raw Data
- Scalability
- Time Travel
- Retention
- Metadata and Lineage
- Historical Data
- Security
- Scalability
- Availability
- Exercise 2.03: Analyzing the Availability of a Data Store
- Availability Consequences
- Time Travel
- Locality of Data
- Metadata and Lineage
- Streaming Data
- Security
- Performance
- Availability
- Retention
- Exercise 2.04: Setting the Requirements for Data Retention
- Analytics Data
- Performance
- Cost-Efficiency
- Quality
- Model Development and Training
- Security
- Availability
- Retention
- Activity 2.01: Requirements Engineering for a Data-Driven Application
- Summary

3. Data Preparation

- Introduction
- ETL
- Data Processing Techniques
- Exercise 3.01: Creating a Simple ETL Bash Script
- Traditional ETL with Dedicated Tooling
- Distributed, Parallel Processing with Apache Spark
- Exercise 3.02: Building an ETL Job Using Spark
- Activity 3.01: Using PySpark for a Simple ETL Job to Find Netflix Shows for All Ages
- Source to Raw: Importing Data from Source Systems
- Raw to Historical: Cleaning Data
- Raw to Historical: Modeling Data
- Historical to Analytics: Filtering and Aggregating Data
- Historical to Analytics: Flattening Data
- Analytics to Model: Feature Engineering
- Analytics to Model: Splitting Data
- Streaming Data
- Windows
- Event Time
- Late Events and Watermarks
- Exercise 3.03: Streaming Data Processing with Spark
- Activity 3.02: Counting the Words in a Twitter Data Stream to Determine the Trending Topics
- Summary

4. The Ethics of AI Data Storage

- Introduction
- Case Study 1: Cambridge Analytica
- Summary and Takeaways
- Case Study 2: Amazon's AI Recruiting Tool
- Imbalanced Training Sets
- Summary and Takeaways
- Case Study 3: COMPAS Software
- Summary and Takeaways
- Finding Built-In Bias in Machine Learning Models
- Exercise 4.01: Observing Prejudices and Biases in Word Embeddings
- Exercise 4.02: Testing Our Sentiment Classifier on Movie Reviews
- Activity 4.01: Finding More Latent Prejudices
- Summary

5. Data Stores: SQL and NoSQL Databases

- Introduction
- Database Components
- SQL Databases
- MySQL
- Advantages of MySQL
- Disadvantages of MySQL
- Query Language
- Terminology
- Data Definition Language (DDL)
- Data Manipulation Language (DML)
- Data Control Language (DCL)
- Transaction Control Language (TCL)
- Data Retrieval
- SQL Constraints
- Exercise 5.01: Building a Relational Database for the FashionMart Store
- Data Modeling
- Normalization
- Dimensional Data Modeling
- Performance Tuning and Best Practices
- Activity 5.01: Managing the Inventory of an E-Commerce Website Using a MySQL Query
- NoSQL Databases
- Need for NoSQL
- Consistency Availability Partitioning (CAP) Theorem
- MongoDB
- Advantages of MongoDB
- Disadvantages of MongoDB
- Query Language
- Terminology
- Exercise 5.02: Managing the Inventory of an E-Commerce Website Using a MongoDB Query
- Data Modeling
- Lack of Joins
- Joins
- Performance Tuning and Best Practices
- Activity 5.02: Data Model to Capture User Information
- Cassandra
- Advantages of Cassandra
- Disadvantages of Cassandra
- Dealing with Denormalizations in Cassandra
- Query Language
- Terminology
- Exercise 5.03: Managing Visitors of an E-Commerce Site Using Cassandra
- Data Modeling
- Column Family Design
- Distributing Data Evenly across Clusters
- Considering Write-Heavy Scenarios
- Performance Tuning and Best Practices
- Activity 5.03: Managing Customer Feedback Using Cassandra
- Exploring the Collective Knowledge of Databases
- Summary

6. Big Data File Formats

- Introduction
- Common Input Files
- CSV: Comma-Separated Values
- JSON: JavaScript Object Notation
- Choosing the Right Format for Your Data
- Orientation: Row-Based or Column-Based
- Row-Based
- Column-Based
- Partitions
- Schema Evolution
- Compression
- Introduction to File Formats
- Parquet
- Exercise 6.01: Converting CSV and JSON Files into the Parquet Format
- Avro
- Exercise 6.02: Converting CSV and JSON Files into the Avro Format
- ORC
- Exercise 6.03: Converting CSV and JSON Files into the ORC Format
- Query Performance
- Activity 6.01: Selecting an Appropriate Big Data File Format for Game Logs
- Summary

7. Introduction to Analytics Engine (Spark) for Big Data

- Introduction
- Apache Spark
- Fundamentals and Terminology
- How Does Spark Work?
- Apache Spark and Databricks
- Exercise 7.01: Creating Your Databricks Notebook
- Understanding Various Spark Transformations
- Exercise 7.02: Applying Spark Transformations to Analyze the Temperature in California
- Understanding Various Spark Actions
- Spark Pipeline
- Exercise 7.03: Applying Spark Actions to the Gettysburg Address
- Activity 7.01: Exploring and Processing a Movie Locations Database Using Transformations and Actions
- Best Practices
- Summary

8. Data System Design Examples

- Introduction
- The Importance of System Design
- Components to Consider in System Design
- Features
- Hardware
- Data
- Architecture
- Security
- Scaling
- Examining a Pipeline Design for an AI System
- Reproducibility: How Pipelines Can Help Us Keep Track of Each Component
- Exercise 8.01: Designing an Automatic Trading System
- Making a Pipeline System Highly Available
- Exercise 8.02: Adding Queues to a System to Make It Highly Available
- Activity 8.01: Building the Complete System with Pipelines and Queues
- Summary

9. Workflow Management for AI

- Introduction
- Creating Your Data Pipeline
- Exercise 9.01: Implementing a Linear Pipeline to Get the Top 10 Trending Videos
- Exercise 9.02: Creating a Nonlinear Pipeline to Get the Daily Top 10 Trending Video Categories
- Challenges in Managing Processes in the Real World
- Automation
- Failure Handling
- Retry Mechanism
- Exercise 9.03: Creating a Multi-Stage Data Pipeline
- Automating a Data Pipeline
- Exercise 9.04: Automating a Multi-Stage Data Pipeline Using a Bash Script
- Automating Asynchronous Data Pipelines
- Exercise 9.05: Automating an Asynchronous Data Pipeline
- Workflow Management with Airflow
- Exercise 9.06: Creating a DAG for Our Data Pipeline Using Airflow
- Activity 9.01: Creating a DAG in Airflow to Calculate the Ratio of Likes-Dislikes for Each Category
- Summary

10. Introduction to Data Storage on Cloud Services (AWS)

- Introduction
- Interacting with Cloud Storage
- Exercise 10.01: Uploading a File to an AWS S3 Bucket Using AWS CLI
- Exercise 10.02: Copying Data from One Bucket to Another Bucket
- Exercise 10.03: Downloading Data from Your S3 Bucket
- Exercise 10.04: Creating a Pipeline Using AWS SDK Boto3 and Uploading the Result to S3
- Getting Started with Cloud Relational Databases
- Exercise 10.05: Creating an AWS RDS Instance via the AWS Console
- Exercise 10.06: Accessing and Managing the AWS RDS Instance
- Introduction to NoSQL Data Stores on the Cloud
- Key-Value Data Stores
- Document Data Stores
- Columnar Data Store
- Graph Data Store
- Data in Document Format
- Activity 10.01: Transforming a Table Schema into Document Format and Uploading It to Cloud Storage
- Summary

11. Building an Artificial Intelligence Algorithm

- Introduction
- Machine Learning Algorithms
- Model Training
- Closed-Form Solution
- Non-Closed-Form Solutions
- Gradient Descent
- Exercise 11.01: Implementing a Gradient Descent Algorithm in NumPy
- Getting Started with PyTorch
- Exercise 11.02: Gradient Descent with PyTorch
- Mini-Batch SGD with PyTorch
- Exercise 11.03: Implementing Mini-Batch SGD with PyTorch
- Building a Reinforcement Learning Algorithm to Play a Game
- Exercise 11.04: Implementing a Deep Q-Learning Algorithm in PyTorch to Solve the Classic Cart Pole Problem
- Activity 11.01: Implementing a Double Deep Q-Learning Algorithm to Solve the Cart Pole Problem
- Summary

12. Productionizing Your AI Applications

- Introduction
- pickle and Flask
- Exercise 12.01: Creating a Machine Learning Model API with pickle and Flask That Predicts Survivors of the Titanic
- Activity 12.01: Predicting the Class of a Passenger on the Titanic
- Deploying Models to Production
- Docker
- Kubernetes
- Exercise 12.02: Deploying a Dockerized Machine Learning API to a Kubernetes Cluster
- Activity 12.02: Deploying a Machine Learning Model to a Kubernetes Cluster to Predict the Class of Titanic Passengers
- Model Execution in Streaming Data Applications
- PMML
- Apache Flink
- Exercise 12.03: Exporting a Model to PMML and Loading it in the Flink Stream Processing Engine for Real-time Execution
- Activity 12.03: Predicting the Class of Titanic Passengers in Real Time
- Summary

Appendix

1. Data Storage Fundamentals

- Activity 1.01: Creating a Text Classifier for Movie Reviews

2. Artificial Intelligence Storage Requirements

- Activity 2.01: Requirements Engineering for a Data-Driven Application

3. Data Preparation

- Activity 3.01: Using PySpark for a Simple ETL Job to Find Netflix Shows for All Ages
- Activity 3.02: Counting the Words in a Twitter Data Stream to Determine the Trending Topics

4. Ethics of AI Data Storage

- Activity 4.01: Finding More Latent Prejudices

5. Data Stores: SQL and NoSQL Databases

- Activity 5.01: Managing the Inventory of an E-Commerce Website Using a MySQL Query
- Activity 5.02: Data Model to Capture User Information
- Activity 5.03: Managing Customer Feedback Using Cassandra

6. Big Data File Formats

- Activity 6.01: Selecting an Appropriate Big Data File Format for Game Logs

7. Introduction to Analytics Engine (Spark) for Big Data

- Activity 7.01: Exploring and Processing a Movie Locations Database by Using Spark's Transformations and Actions

8. Data System Design Examples

- Activity 8.01: Building the Complete System with Pipelines and Queues

9. Workflow Management for AI

- Activity 9.01: Creating a DAG in Airflow to Calculate the Ratio of Likes-Dislikes for Each Category

10. Introduction to Data Storage on Cloud Services (AWS)

- Activity 10.01: Transforming a Table Schema into Document Format and Uploading It to Cloud Storage

11. Building an Artificial Intelligence Algorithm

- Activity 11.01: Implementing a Double Deep Q-Learning Algorithm to Solve the Cart Pole Problem

12. Productionizing Your AI Applications

- Activity 12.01: Predicting the Class of a Passenger on the Titanic
- Activity 12.02: Deploying a Machine Learning Model to a Kubernetes Cluster to Predict the Class of Titanic Passengers
- Activity 12.03: Predicting the Class of Titanic Passengers in Real Time

